Here is a brief overview of what you will learn in this book.

Part 1: Fundamental Concepts

In the first part, you will be introduced to the LibGDX library, and build a custom framework on top of this library to simplify creating a variety of games.

Chapter 1: Getting Started with Java and LibGDX

This chapter explains how to set up BlueJ, a Java development environment chosen for its simplicity and user-friendliness. Instructions for setting up the LibGDX software library are given, and a visual “Hello, World!” program is presented (which displays an image of the world in a window).

Chapter 2: The LibGDX Framework

This chapter begins by discussing the overall structure of a video game program, including the stages that a game program progresses through, and the tasks that must be accomplished at each stage. Many of the major features and classes of the LibGDX library are introduced in the process of creating a basic game called Starfish Collector. This game is a recurring example throughout the book: features will be added to this project when introducing new topics.
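As a preview of the kind of structure Chapter 2 discusses, the stages of a game program can be sketched in plain Java as follows. This is only an illustration under stated assumptions: the class names SimpleGame and CounterGame, the method names, and the fixed time step are hypothetical and are not LibGDX classes or the framework built in this book.

```java
// A minimal sketch of the stages a game program passes through.
// All names here are hypothetical; this is not LibGDX code.
abstract class SimpleGame {
    private boolean running = true;

    // Stage 1: runs once, before the loop starts (load assets, create objects).
    abstract void initialize();

    // Stage 2: runs every frame (process input, move objects, check collisions).
    abstract void update(float dt);

    // Stage 3: runs every frame, after update (draw the current state).
    abstract void render();

    void stop() {
        running = false;
    }

    // Runs the loop with a fixed time step until stop() is called
    // or maxFrames frames have elapsed.
    void run(int maxFrames, float dt) {
        initialize();
        for (int frame = 0; running && frame < maxFrames; frame++) {
            update(dt);
            render();
        }
    }
}

// A trivial "game" that only counts how often each stage runs.
class CounterGame extends SimpleGame {
    boolean initialized = false;
    int updates = 0;
    int renders = 0;

    void initialize() {
        initialized = true;
    }

    void update(float dt) {
        updates++;
        if (updates == 3) {
            stop(); // end the game after the third update
        }
    }

    void render() {
        renders++;
    }
}
```

Calling new CounterGame().run(10, 1f / 60f) runs initialize once, then alternates update and render until the game stops itself during the third frame.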

Chapter 3: Extending the Framework

In this chapter, you’ll start with one of the core LibGDX classes that represents game entities, and create an extension of this class to support animation, physics-based movement, improved collision detection, and the ability to manage multiple instances of an entity using lists.

Chapter 4: Shoot-em-up Games

This chapter demonstrates the power of the framework you have created by using it to make an entirely new game: Space Rocks, a space-themed shoot-em-up game inspired by the classic arcade game Asteroids.

Chapter 5: Text and User Interfaces

In this chapter, you will learn how to display text, create buttons that display an image or text, and design a user interface using tables. First, you will be introduced to these skills by adding these features to the Starfish Collector game from Chapter 3. Then you will build on and strengthen these skills while learning how to create cutscenes (sometimes called in-game cinematics) that provide a narrative element to your games. In the final section, you will create a visual novel style game called The Missing Homework, which focuses on a story and allows the player to make decisions about how the story proceeds.

Chapter 6: Audio

In this chapter, you will learn how to add audio elements (sound effects and background music) to your game. First, you will be introduced to these topics by adding these features to the Starfish Collector game. Then you will build on these skills by creating a rhythm-based music game called Rhythm Tapper, in which the player presses a sequence of keys that is indicated visually and synchronized with music playing in the background.

Part 2: Intermediate Examples

With a solid foundation in the fundamental concepts and classes of LibGDX, and the custom framework you developed and refined in Part 1, you are now prepared to create a variety of video games from different genres, each featuring different mechanics.

Chapter 7: Side-Scrolling Games

In this chapter, you will create a side-scrolling action game called Plane Dodger, inspired by modern smartphone games such as Flappy Bird and Jetpack Joyride. Along the way, you will learn how to create an endless scrolling background effect, simulate gravity using acceleration settings, and implement a difficulty ramp that increases the challenge to the player as time passes.
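To give a rough sense of what simulating gravity with acceleration involves, here is a minimal sketch in plain Java. It is not the framework code developed in the chapter: the class FallingBody, its fields, and the numeric values used with it are hypothetical, and the sketch assumes a y-axis that points upward and a simple per-frame (Euler) update.

```java
// A minimal sketch of gravity as constant downward acceleration.
// FallingBody and all of its members are hypothetical names.
class FallingBody {
    float y;              // vertical position, in pixels (y-axis points up)
    float velocityY;      // vertical velocity, in pixels per second
    final float gravity;  // downward acceleration, in pixels per second squared

    FallingBody(float startY, float gravity) {
        this.y = startY;
        this.velocityY = 0;
        this.gravity = gravity;
    }

    // Advances the simulation by dt seconds:
    // acceleration changes velocity, then velocity changes position.
    void update(float dt) {
        velocityY -= gravity * dt;
        y += velocityY * dt;
    }

    // Replaces the current velocity with an upward one,
    // as might happen when the player presses a key.
    void boost(float upwardSpeed) {
        velocityY = upwardSpeed;
    }
}
```

Because the velocity changes a little every frame, the object falls faster the longer it falls, and a call to boost is gradually cancelled out by gravity rather than lifting the object forever.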

Chapter 8: Bouncing and Collision Games

In this chapter, you will create a ball-bouncing, brick-breaking game called Rectangle Destroyer, inspired by arcade and early console games such as Breakout and Arkanoid. New features that will be implemented in this game include moving an object using the mouse, simulating objects bouncing off of other objects, and creating power-up items that the player can collect.

Chapter 9: Drag and Drop Games

In this chapter, you will learn how to add drag and drop functionality to your games, and create a new class containing the related code. To demonstrate the flexibility of this new class, you will create two new games that make use of it. The first will be a jigsaw puzzle game, which consists of an image that has been broken into pieces that must be rearranged correctly on a grid. The second will be a solitaire card game called 52 Card Pickup, where a standard deck of playing cards must be organized into piles.

Chapter 10: Tilemaps

This chapter will explain how to use Tiled, a general-purpose map editing software program that can be used for multiple aspects of the level design process. Then you will create a class that allows you to import the data from tilemap files into the custom framework you have developed. This knowledge will be used to improve two previous game projects: for the Starfish Collector game, you will design a maze-like level (using rocks for walls) and add some scenery, while for the Rectangle Destroyer game, you will design a colorful layout of bricks.

Chapter 11: Platform Games

In this chapter, you will learn how to create the platform game Jumping Jack, inspired by arcade and console games such as Donkey Kong and Super Mario Bros. New concepts introduced in this chapter include game entities with multiple animations, platform physics, using extra actors as "sensors" to monitor the area around an object for overlap and collision, jump-through platforms, and key-and-lock mechanics.

Chapter 12: Adventure Games

This chapter features the most ambitious game project in the entire book: a combat-based adventure game named Treasure Quest, inspired by classic console games such as The Legend of Zelda. This game uses new features such as enemy combat with two different types of weapons (a sword and an arrow), non-player characters (NPCs) with messages that depend on the state of the game (such as the number of enemies remaining), and an item shop mechanic.

Part 3: Advanced Topics

This final part of the book contains some additional optional features that can be added to many of the previous projects, and some game projects that involve advanced algorithms and graphics.

Chapter 13: Alternative Sources of User Input

This chapter will explore two alternative sources of user input: gamepad controllers and touch-screen controls. In particular, you will add both of these to the Starfish Collector game that has been featured in previous chapters.

Chapter 14: Maze Games

In this chapter, you will learn how to create the maze-based game Maze Runman, inspired by arcade games such as Pac-Man and the early console game Maze Craze. The main new concepts in this chapter are algorithms for generating and solving mazes.
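For readers curious about what such algorithms can look like, the following plain Java sketch generates a maze with a randomized depth-first search and solves it with a breadth-first search. These two techniques are chosen here purely for illustration and are not necessarily the ones used in the chapter; the class MazeSketch and everything in it are hypothetical names.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Deque;
import java.util.List;
import java.util.Random;

// A minimal sketch of maze generation and solving on a grid of cells.
// MazeSketch is a hypothetical class, not part of LibGDX or the book's framework.
class MazeSketch {
    // Direction offsets: 0 = north, 1 = east, 2 = south, 3 = west.
    static final int[] DX = {0, 1, 0, -1};
    static final int[] DY = {1, 0, -1, 0};

    final int width;
    final int height;
    // open[x][y][d] is true when the wall on side d of cell (x, y) has been removed.
    final boolean[][][] open;

    MazeSketch(int width, int height, long seed) {
        this.width = width;
        this.height = height;
        this.open = new boolean[width][height][4];
        generate(new Random(seed));
    }

    boolean inBounds(int x, int y) {
        return x >= 0 && x < width && y >= 0 && y < height;
    }

    // Randomized depth-first search: walk to a random unvisited neighbor,
    // removing the wall in between, and backtrack when there is none left.
    private void generate(Random random) {
        boolean[][] visited = new boolean[width][height];
        Deque<int[]> stack = new ArrayDeque<>();
        visited[0][0] = true;
        stack.push(new int[] {0, 0});
        while (!stack.isEmpty()) {
            int[] cell = stack.peek();
            List<Integer> choices = new ArrayList<>();
            for (int d = 0; d < 4; d++) {
                int nx = cell[0] + DX[d];
                int ny = cell[1] + DY[d];
                if (inBounds(nx, ny) && !visited[nx][ny]) {
                    choices.add(d);
                }
            }
            if (choices.isEmpty()) {
                stack.pop(); // dead end: backtrack
                continue;
            }
            int chosen = choices.get(random.nextInt(choices.size()));
            int nextX = cell[0] + DX[chosen];
            int nextY = cell[1] + DY[chosen];
            open[cell[0]][cell[1]][chosen] = true;
            open[nextX][nextY][(chosen + 2) % 4] = true; // same wall, seen from the other side
            visited[nextX][nextY] = true;
            stack.push(new int[] {nextX, nextY});
        }
    }

    // Breadth-first search: returns the number of steps on a shortest path
    // from the start cell to the goal cell, or -1 if the goal is unreachable.
    int shortestPathLength(int startX, int startY, int goalX, int goalY) {
        int[][] distance = new int[width][height];
        for (int[] column : distance) {
            Arrays.fill(column, -1);
        }
        Deque<int[]> queue = new ArrayDeque<>();
        distance[startX][startY] = 0;
        queue.add(new int[] {startX, startY});
        while (!queue.isEmpty()) {
            int[] cell = queue.remove();
            if (cell[0] == goalX && cell[1] == goalY) {
                return distance[goalX][goalY];
            }
            for (int d = 0; d < 4; d++) {
                if (!open[cell[0]][cell[1]][d]) {
                    continue; // a wall blocks this direction
                }
                int nx = cell[0] + DX[d];
                int ny = cell[1] + DY[d];
                if (distance[nx][ny] == -1) {
                    distance[nx][ny] = distance[cell[0]][cell[1]] + 1;
                    queue.add(new int[] {nx, ny});
                }
            }
        }
        return -1;
    }
}
```

A maze built this way connects every cell to every other cell by exactly one route, so new MazeSketch(8, 6, 42L).shortestPathLength(0, 0, 7, 5) always finds a path from one corner to the opposite one.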

Chapter 15:

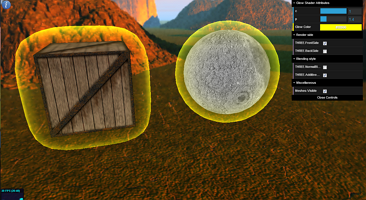

Advanced 2D Graphics

In this chapter, you will learn two techniques for incorporating sophisticated graphics into your projects. The first topic is particle systems, which can create special effects such as explosions; these will be incorporated into the Space Rocks game in place of spritesheet-based animations. The second topic is shader programming, which manipulates the pixels of a rendered image to create effects such as blurring or glowing; these will be incorporated into the Starfish Collector game.

Chapter 16: Introduction to 3D Graphics and Games

This chapter introduces some of the 3D graphics capabilities of LibGDX and the concepts and classes necessary to describe and render a three-dimensional scene. You’ll create the game Starfish Collector 3D, a three-dimensional version of the Starfish Collector game introduced at the beginning of the book.

This final chapter presents a variety of steps to consider as you continue on in game development. Among these, you’ll explore working on additional projects, learning skills in related areas, and bringing your games to a wider audience. Along the way, the chapter presents lists of resources of all types, and general advice for many situations.